Bonus Clouded Judgement - Inference Time Compute

What a few days it's been in AI land! DeepSeek released R1, and it sent huge ripples through the tech world, perhaps culminating in the DeepSeek app shooting to #1 in the App Store (is this real, or the result of bot farms downloading it en masse?).

What is DeepSeek? DeepSeek is an AI company that focuses on distilling large, complex AI models into smaller, more efficient versions (while also adding its own research breakthroughs). These smaller models retain much of the capability of the originals but are cheaper and faster to use. DeepSeek released its R1 model last week. R1 is a reasoning model, designed to excel at tasks requiring logical thought, problem-solving, and decision-making. And it’s pretty good!
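
To make "distilling" a bit more concrete, here is a minimal sketch of the classic soft-target knowledge-distillation loss, in which a small "student" model is trained to match the output probabilities of a large "teacher." This is a generic textbook illustration, not necessarily how DeepSeek distills its models; the function names and the logits (raw scores over four candidate next tokens) are made up for the example.

```python
import math

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature softens them."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions.

    The temperature ** 2 factor keeps the loss scale comparable across
    temperatures, as in the classic soft-target recipe.
    """
    p = softmax(teacher_logits, temperature)  # teacher's soft targets
    q = softmax(student_logits, temperature)  # student's current prediction
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2

# Hypothetical scores over four candidate next tokens.
teacher = [4.0, 2.5, 0.5, -1.0]
close_student = [3.8, 2.6, 0.4, -0.9]  # already mimics the teacher closely
far_student = [0.0, 0.0, 3.0, 1.0]     # prefers a different token

print(distillation_loss(teacher, close_student))  # small
print(distillation_loss(teacher, far_student))    # much larger
```

In practice this term is usually combined with an ordinary training loss and minimized over many examples; the sketch only shows how the "match the teacher" signal is measured.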

DeepSeek says it spent less than $10M on the final training run. It’s important to point out that this figure does not include the research experiments that led to that final successful run, the failed training runs along the way, the data costs, the capex, and so on. The true “fully loaded” cost is probably much higher. For an apples-to-apples comparison with today’s state-of-the-art models, DeepSeek’s figure should be compared with the cost of o1’s final successful training run, not with OpenAI’s total spend.
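
The accounting point above can be made concrete with a toy calculation. This is a minimal sketch with made-up placeholder figures (none of them are DeepSeek’s or OpenAI’s actual costs); the only point is that a headline "final run" number can be a small slice of the fully loaded total, so comparisons should pair like with like.

```python
# Hypothetical cost model: final-run cost vs. "fully loaded" cost.
# All figures are illustrative placeholders, NOT any lab's real numbers.

def final_run_cost(gpu_hours: float, price_per_gpu_hour: float) -> float:
    """Cost of the single successful training run: GPU time only."""
    return gpu_hours * price_per_gpu_hour

def fully_loaded_cost(final_run: float, other_costs: dict[str, float]) -> float:
    """Final run plus everything else that made it possible."""
    return final_run + sum(other_costs.values())

run = final_run_cost(gpu_hours=1_500_000, price_per_gpu_hour=3.0)
extras = {
    "research experiments": 20_000_000,
    "failed training runs": 10_000_000,
    "data": 5_000_000,
    "capex (cluster, amortized)": 50_000_000,
}
total = fully_loaded_cost(run, extras)

print(f"final run:    ${run:,.0f}")    # $4,500,000
print(f"fully loaded: ${total:,.0f}")  # $89,500,000
```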

This has raised a lot of questions, too many to unpack here. So instead, I want to spend some time discussing reasoning models and inference-time compute, and why they are at the heart of the raging debate that R1 kicked off.

Let’s start with reasoning. OpenAI kicked off this paradigm with its o-series of models, and o3 is the current state-of-the-art model. Google has Gemini 2.0 Flash Thinking, and there will certainly be more. R1 is DeepSeek’s reasoning model.

So, what is a reasoning model? A reasoning model is designed to simulate logical thinking, i.e., reasoning. It doesn’t just recognize patterns or repeat information “learned” during training; it solves problems and follows chains of thought to arrive at conclusions. Think of it as an AI that doesn’t just "know stuff," but also knows how to figure things out when faced with new problems.

Related to reasoning models is inference-time compute: the computational power a model needs, after it has been trained, to "think" through a problem and produce an answer step by step. It covers everything the model does to process your input, break it down, reason about it, and generate a coherent response.

One way to think about this is to imagine the model as a problem-solver working step by step (a chain of thought). Let’s say you ask it to figure out how many apples a farmer has left after selling some. First, the model reads your question and interprets what it’s asking. Then, it identifies the key details: the number of apples the farmer started with, how many were sold, and what operation needs to be performed. It calculates the answer step by step, checks its work to ensure consistency, and then generates a polished response. I generally like to think of reasoning models as models that “check their work as they go.”
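
To make the step-by-step picture concrete, here is the apple example written out as ordinary Python. It is only an analogy: a reasoning model produces these steps as generated text, not as code, and the starting count (48), the amount sold (17), and the function name are made up for illustration. The point is that every step, including the final check, is extra work beyond simply emitting an answer.

```python
# A toy "chain of thought" for the apple question. This mirrors the steps
# described above in plain code; it is NOT how a language model works inside.

def solve_apples(started_with: int, sold: int) -> tuple[int, list[str]]:
    trace = []  # the visible chain of thought
    # Step 1: interpret the question.
    trace.append("Question: how many apples are left after selling some?")
    # Step 2: identify the key details and the operation to perform.
    trace.append(f"Details: start={started_with}, sold={sold}, operation=subtract")
    # Step 3: calculate.
    left = started_with - sold
    trace.append(f"Calculate: {started_with} - {sold} = {left}")
    # Step 4: check the work. Adding back what was sold should recover the
    # start, and a farmer cannot end up with a negative number of apples.
    consistent = (left + sold == started_with) and left >= 0
    trace.append(f"Check: {left} + {sold} = {left + sold} -> "
                 f"{'consistent' if consistent else 'inconsistent'}")
    if not consistent:
        raise ValueError("Inconsistent answer: the farmer sold more than they had")
    # Step 5: produce the polished response.
    trace.append(f"Answer: the farmer has {left} apples left.")
    return left, trace

answer, steps = solve_apples(started_with=48, sold=17)
for step in steps:
    print(step)
```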

Each part of this process - understanding the question, reasoning through it, and verifying the output - requires compute power. The more complex the task or the more steps involved in the chain of thought, the more compute is needed. This is especially true for reasoning models like o3 and R1, which are designed to tackle multi-step problems, or tasks requiring careful validation.

What’s fascinating about this is that reasoning models don’t just "spit out" answers - they simulate a kind of thinking. They keep track of intermediate steps (chain of thought), check for contradictions, and sometimes even reevaluate their initial approach if something doesn’t seem right along the way. This makes them significantly more compute-intensive during inference compared to simpler models that rely purely on pattern recognition or single-step responses.
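
A rough way to see why reasoning is compute-intensive: for a standard dense transformer, generating each token costs roughly 2 FLOPs per model parameter (a widely used approximation that ignores attention overhead and hardware efficiency). For a fixed model, compute therefore scales with the number of tokens produced, and a model that writes a long chain of thought before answering pays for every one of those tokens. The 70B model size and the token counts below are hypothetical.

```python
# Back-of-envelope inference compute using the ~2 FLOPs per parameter per
# generated token rule of thumb for dense transformers.
# Model size and token counts are hypothetical.

def inference_flops(params: float, output_tokens: int) -> float:
    return 2 * params * output_tokens

PARAMS = 70e9  # a hypothetical 70B-parameter dense model

direct = inference_flops(PARAMS, output_tokens=100)             # short answer only
reasoning = inference_flops(PARAMS, output_tokens=100 + 5_000)  # answer + chain-of-thought tokens

print(f"direct answer:  {direct:.2e} FLOPs")
print(f"with reasoning: {reasoning:.2e} FLOPs")
print(f"ratio:          {reasoning / direct:.0f}x")  # 51x
```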

As AI applications increasingly require deeper reasoning - think autonomous agents making decisions, chatbots solving complex queries, or systems interacting in real time - the compute demand during inference starts to rival or even exceed what was previously required during training. This shift is central to the paradigm change we’re seeing in AI today.

To date, when we’ve talked about compute needs and being compute-constrained, it’s generally been related to scaling up pre-training. Throw more compute and data in the training phase to make these large models more powerful and capable. Compute was also used for inference, but the majority of the compute went towards training (so far, but that’s really starting to inflect in the other direction).
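
The training-versus-inference balance can be sketched with two common rules of thumb for dense transformers: training costs about 6 × N × D FLOPs (N parameters, D training tokens), and generating one token costs about 2 × N FLOPs. Dividing one by the other, cumulative inference catches up with pre-training once a model has served roughly 3 × D tokens, regardless of model size. The model size, training-set size, and daily volume below are hypothetical; the takeaway is that heavy usage, especially token-hungry reasoning, pulls that crossover point closer.

```python
# When does cumulative inference compute catch up with pre-training compute?
# Two common rules of thumb for dense transformers (approximations only):
#   training  ~ 6 * N * D FLOPs  (N = parameters, D = training tokens)
#   inference ~ 2 * N FLOPs per generated token
# All model and usage figures below are hypothetical.

def training_flops(params: float, train_tokens: float) -> float:
    return 6 * params * train_tokens

def crossover_tokens(params: float, train_tokens: float) -> float:
    """Tokens served before inference compute equals training compute (= 3 * D)."""
    per_token = 2 * params
    return training_flops(params, train_tokens) / per_token

N = 70e9   # hypothetical 70B-parameter model
D = 15e12  # hypothetical 15T training tokens

t_star = crossover_tokens(N, D)
print(f"crossover after serving {t_star:.1e} tokens")  # 4.5e+13 (3x the training tokens)

# Hypothetical usage level: 100B generated tokens per day across all users.
TOKENS_PER_DAY = 100e9
print(f"at that volume, crossover arrives in ~{t_star / TOKENS_PER_DAY:,.0f} days")
```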

This is why debates started to rage around Nvidia towards the end of last year as we started to hear more and more about "scaling laws ending." People started to question if you could really just throw more compute at a model to meaningfully improve its performance. And if not - would people’s significant spend on Nvidia continue?

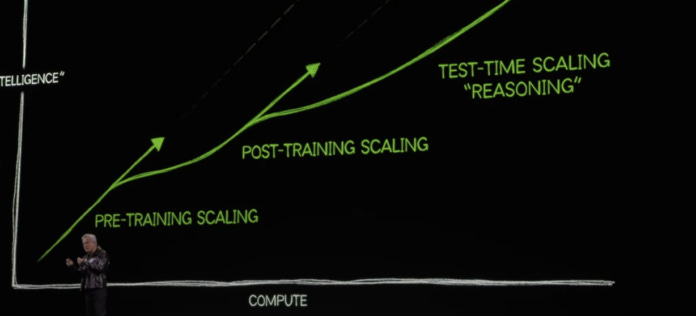

That scaling laws debate is still ongoing, but I think it’s causing people to take their eye off the real question: what does the shift toward reasoning and inference-time compute mean for compute demand? Here’s what Jensen had to say:

His point is that we’re moving to a new paradigm, one dominated by inference-time compute (test-time scaling).

Inference-time compute could lead to an explosion in compute demand. As more AI applications go into production, they will need inference compute to serve real-world users in real time. For example, AI-powered agents (programs that autonomously interact with users or systems to complete tasks) rely heavily on inference-time compute. The more agents or apps run in parallel, and the more steps each agent takes, the more compute is needed (i.e., the more thinking and reasoning is needed). These factors multiply, so demand compounds quickly as AI proliferates. And it’s important to note that reasoning models push much of the compute to inference time.
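
The levers named above multiply rather than add. Here is a minimal sketch that counts generated tokens as a proxy for compute (for a given model, inference compute scales roughly in proportion to tokens generated); every input is a hypothetical placeholder, and the function name is made up for illustration.

```python
# Toy model of agent-driven inference demand, counted in generated tokens.
# Every input is a hypothetical placeholder; only the shape of the math matters.

def daily_tokens(agents: int, tasks_per_agent: int, steps_per_task: int,
                 tokens_per_step: int) -> int:
    # Each lever multiplies the others: more agents, more tasks,
    # more reasoning steps, and longer steps all scale demand.
    return agents * tasks_per_agent * steps_per_task * tokens_per_step

base = daily_tokens(agents=1_000, tasks_per_agent=20, steps_per_task=5, tokens_per_step=500)

# Ten times as many agents, each taking twice as many reasoning steps per task:
scaled = daily_tokens(agents=10_000, tasks_per_agent=20, steps_per_task=10, tokens_per_step=500)

print(f"baseline: {base:,} tokens/day")    # 50,000,000 tokens/day
print(f"scaled:   {scaled:,} tokens/day")  # 1,000,000,000 tokens/day
print(f"growth:   {scaled // base}x")      # 20x
```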

Here’s one of my beliefs: cheaper models (R1 or anyone else’s) will lead to an EXPLOSION of building with AI. Why not tinker, explore, or prototype more when something becomes cheaper? When something becomes cheaper and more accessible, it naturally leads to more consumption. And in this case, more consumption of cheaper models leads to, you guessed it, more inference-time compute!
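
The "cheaper means more consumption" argument can be framed with a standard constant-elasticity demand curve. This is a sketch under an assumed functional form and assumed elasticity values, not a forecast; nobody knows the true elasticity of demand for AI inference.

```python
# Constant-elasticity demand curve: quantity = k * price ** (-elasticity).
# The elasticity values are assumptions chosen for illustration only.

def quantity_demanded(price: float, elasticity: float, k: float = 1.0) -> float:
    return k * price ** (-elasticity)

old_price, new_price = 1.0, 0.1  # a hypothetical 10x price cut

for elasticity in (0.5, 1.0, 1.5):
    q_old = quantity_demanded(old_price, elasticity)
    q_new = quantity_demanded(new_price, elasticity)
    spend_change = (new_price * q_new) / (old_price * q_old)
    print(f"elasticity {elasticity}: usage x{q_new / q_old:.1f}, "
          f"total spend x{spend_change:.2f}")
```

Usage (and therefore inference compute) rises for any positive elasticity; total spend also rises only when demand is elastic, i.e. elasticity above 1 (the Jevons-paradox case).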

If you believe scaling laws are ending (I don’t), then you might argue we’ll see a drop in pre-training spend. Even in that case, though, I think the drop gets quickly absorbed and surpassed by the incremental demand inference-time compute creates: if all the models get cheaper, demand will go up (i.e., more inference). There is, however, a timing question: how long would it take for inference-time compute to "make up the difference"? Personally, I don’t think we’ll see the large AI labs slow their spend, because I don’t think scaling laws are ending (at the end of the day, R1 wouldn’t have existed without the OpenAI models, given the distillation). And I think we’ll simultaneously see inference-time compute start to skyrocket.

I do, however, think there is now a new debate, or phrased differently, a new dimension of the same debate. These smaller, distilled models are generally more efficient: they have fewer layers and fewer parameters. Smaller models take less compute at inference time because they perform fewer calculations to arrive at an answer, which makes them cheaper and faster to use in real-world applications than their larger counterparts. However, larger models tend to be more powerful and accurate, especially on complex tasks.
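
The efficiency gap can be estimated with the rough rule of thumb that a dense transformer spends about 2 FLOPs per parameter for every generated token. A minimal sketch with hypothetical parameter counts and a fixed, hypothetical response length:

```python
# Per-query inference cost for a large vs. a small (e.g. distilled) dense model,
# using the ~2 FLOPs per parameter per generated token approximation.
# Parameter counts and token count are hypothetical.

def flops_per_query(params: float, tokens: int) -> float:
    return 2 * params * tokens

TOKENS = 2_000  # same hypothetical response length for both models

large = flops_per_query(params=400e9, tokens=TOKENS)
small = flops_per_query(params=8e9, tokens=TOKENS)

print(f"large model: {large:.1e} FLOPs per query")
print(f"small model: {small:.1e} FLOPs per query")
print(f"small model needs ~{large / small:.0f}x less compute per query")  # ~50x
```

This ignores quality: the larger model may answer correctly where the smaller one fails, which is exactly the trade-off above. It also assumes dense models; mixture-of-experts models activate only a subset of their parameters per token.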

So I do think there’s a debate over whether the future will be concentrated around smaller, domain-specific distilled models or larger, more powerful ones. And this isn’t an opinion on AI labs vs. small players: AI labs can easily release smaller, distilled versions of their own models.

I’ve yet to form a firm opinion on the small model vs. large model debate, but I suspect the answer lies somewhere in between. We wouldn’t have any of the progress to date without the large models, so I don’t think that trajectory changes, but I do think smaller, domain-specific models will play an increasing role. At the end of the day, I think it’s a rising tide for all. It’s no different in the real world: whether you’re buying a car or hiring an engineer, there are “budget” options and there are premium options. Both have a market, and each comes with its own pros, cons, and implications. If we do end up more in a small-model world, that just means the end products are cheaper, which probably drives more demand, which in turn drives more compute demand. One thing I am convinced of: the pendulum will keep swinging. Soon we’ll probably be wowed by releases from the large AI labs, and the pendulum will swing back to “large models will win.” Then small models will have a breakthrough, and the cycle will repeat :)

I do think there’s a future where a number of things shift. Will the large AI labs stop releasing their full, large models and instead release smaller, distilled versions? Will every vendor move toward open-sourcing its models? Will the AI labs’ "product" shift from the model itself (the API) to products built around the model? Meta started applying pressure when it open-sourced Llama, and DeepSeek took it to the next level with R1 (and its other models). There’s little to no room left for closed-source models that aren’t state of the art. The future is dynamic, and it’s never been more fun to dive in and research. All I know is that AI is becoming more powerful at the same time as it’s becoming more accessible. That’s a great thing!
