Clouded Judgement 11.10.23 - OpenAI Updates + Datadog Gives the All-Clear?

Every week I’ll provide updates on the latest trends in cloud software companies. Follow along to stay up to date!

OpenAI Updates

OpenAI had their big developer day this week, and I wanted to call out two key announcements (and trends): increasing context windows and decreasing costs.

When I think about the monetization of AI (and which “layers” monetize first) I’ve always thought it would follow the below order, with each layer lagging the one that comes before it.

1. Raw silicon (chips like Nvidia's, bought in large quantities to build out infra to service upcoming demand).

2. Model providers (OpenAI, Anthropic, etc. as companies start building out AI).

3. Hyperscalers (AWS, Azure, GCP as companies that aren’t building out their own data centers look for cloud GPUs).

4. Infra (data layer, orchestration, monitoring, ops, etc.).

5. Durable applications.

We’re clearly well underway with the first 3 layers monetizing. We’re just starting the fourth layer, and the fifth layer is showing up in some pockets, but without widespread monetization (and I should clarify - scalable monetization). The caveat is important. I’ve heard of a well known company that had an offshore team handling lots of manual customer work (i.e. responses), and this “product” had a ~50% gross margin. When they started using large language models from OpenAI, the gross margin on the same product went to -100%! (Yes, that’s negative 100%.) While the product was “monetizing,” I wouldn’t count it as scalable monetization.
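To make the margin swing concrete, here is a minimal sketch of the arithmetic. It assumes the product's revenue stayed flat while only the cost of delivery changed; the revenue figure of 100 is a made-up placeholder, not a number from the company in question.

```python
def gross_margin(revenue: float, cogs: float) -> float:
    """Gross margin as a fraction of revenue."""
    return (revenue - cogs) / revenue


def cogs_for_margin(revenue: float, margin: float) -> float:
    """Cost of goods sold implied by a given gross margin."""
    return revenue * (1 - margin)


revenue = 100.0  # hypothetical, assumed unchanged before and after

cogs_before = cogs_for_margin(revenue, 0.50)   # 50% gross margin
cogs_after = cogs_for_margin(revenue, -1.00)   # -100% gross margin
cost_multiple = cogs_after / cogs_before

print(f"COGS before: {cogs_before}, after: {cogs_after}")
print(f"Delivery cost grew {cost_multiple:.0f}x on the same revenue")
```

Under that flat-revenue assumption, a -100% gross margin means the product costs twice what it brings in, or four times what the offshore team cost.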

We haven’t quite cracked widespread use of AI in production yet. There are many limiters here - data security and compliance are big ones. But even more important right now is cost. At the end of the day, these large language models are quite expensive! As a vendor using them, you can either pass the costs through to your end customer (to maintain your gross margins), or eat the costs and lower your gross margins (because the customer isn’t willing to pay the incremental cost for the incremental functionality brought about by AI) and hope the model providers lower their prices in the future. It seems like every company has been experimenting, saying things like “just build out all the AI functionality now and then we’ll evaluate if customers will pay for it.” Now that we’re getting through this initial wave of experimentation and AI buildout, there’s quite a bit of sticker shock when the OpenAI bills come due! People are looking to build in model portability so they can switch to lower cost models (or open source).

This brings me back to the initial point - the two announcements from OpenAI I want to highlight here.

Context length: GPT 4 Turbo has a 128k token context window, up from 8k tokens for GPT 4 (think of 128k tokens as ~300 pages of text worth of input). This means what you can put into a prompt just went up dramatically.

Costs decreasing: GPT 4 Turbo is 3x cheaper for input tokens (think of this as roughly the length of the prompt) and 2x cheaper for output tokens. This equates to $0.01 per 1k input tokens, and $0.03 per 1k output tokens. On a blended basis, GPT 4 Turbo is roughly 2.5-3x cheaper than GPT 4.
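The blended savings depend on the mix of input and output tokens, which a short sketch can show. The GPT 4 Turbo prices are the ones quoted above; the GPT 4 prices are not stated directly and are implied here by the 3x and 2x ratios. The token counts are made-up examples.

```python
# Prices in dollars per 1k tokens.
TURBO = {"input": 0.01, "output": 0.03}  # quoted above
GPT4 = {"input": 0.03, "output": 0.06}   # implied by "3x" and "2x" cheaper


def request_cost(prices: dict, input_tokens: int, output_tokens: int) -> float:
    """Dollar cost of one request at the given per-1k-token prices."""
    return (input_tokens / 1000) * prices["input"] + (
        output_tokens / 1000
    ) * prices["output"]


def savings_multiple(input_tokens: int, output_tokens: int) -> float:
    """How many times cheaper GPT 4 Turbo is than GPT 4 for this token mix."""
    return request_cost(GPT4, input_tokens, output_tokens) / request_cost(
        TURBO, input_tokens, output_tokens
    )


# Hypothetical mixes: prompts are usually longer than completions.
for mix in [(3000, 1000), (9000, 1000)]:
    print(mix, round(savings_multiple(*mix), 2))
```

The multiple always lands between 2x (all output) and 3x (all input), and prompt-heavy workloads push it toward the top of that range.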

The cost decrease is very meaningful - it lowers the barrier to experiment with AI, and also lowers the barrier for these AI functionalities to be pushed into production (because vendors don’t have to increase price nearly as much). Also, as Moin pointed out on Twitter / X, as context windows increase the need for task / domain-specific models (or fine-tuned models) decreases. The counter argument is that it’s unclear whether we’ll be able to find enough high quality long context training data. Either way - it’s clear these models are becoming cheaper and more effective, which is an exciting future for AI! I think we’re about to see an explosion of AI applications with good business models in the near future. 2024 will be the year of AI applications!

Datadog Gives Software the All Clear?

This week software stocks shot up on Tuesday, largely a result of Datadog’s quarterly earnings. Datadog in particular was up ~30%. So what happened? They made a number of comments about optimizations easing up, and the worst being behind us. Here are some quotes:

“It looks like we've hit an inflection point. It looks like there's a lot less overhang now in terms of what needs to be optimized or could be optimized by customers. It looks like also optimization is less intense and less widespread across the customer base.”

“We had a very healthy start to Q4 in October...the trends we see in early Q4 are stronger than they've been for the past year.”

“As we look at our overall customer activity, we continue to see customers optimizing but with less impact than we experienced in Q2, contributing to our usage growth with existing customers improving in Q3 relative to Q2.”

“As a reminder, last quarter, we discussed a cohort of customers who began optimizing about a year ago and we said that they appear to stabilize their users growth at the end of Q2. That trend has held for the past several months with that cohorts usage remaining stable throughout Q3.”

Datadog was one of the first companies to really highlight an improving macro environment. And even more important, they called out a great month of October (first month of Q4 for them). So how do we reconcile their positive commentary with the largely neutral commentary from the rest of the software universe? Most likely Datadog is seeing trends more unique to their own business. As the market puts a greater emphasis on bundled platforms today vs point solutions, they appear to be an incremental winner of market share. Best of breed platforms (with more of a usage based model) will recover first (in terms of revenue growth recovery). Datadog appears to be in that bucket and recovering first. This doesn’t mean the rest of the software universe will follow suit. There will be many “pretenders” who never recover and find themselves bundled into oblivion. However, the positive commentary from Datadog is the first sign that we’re starting to turn a corner. So while the rest of the software universe may not be at that corner today, we’re starting to see the light at the end of the tunnel.

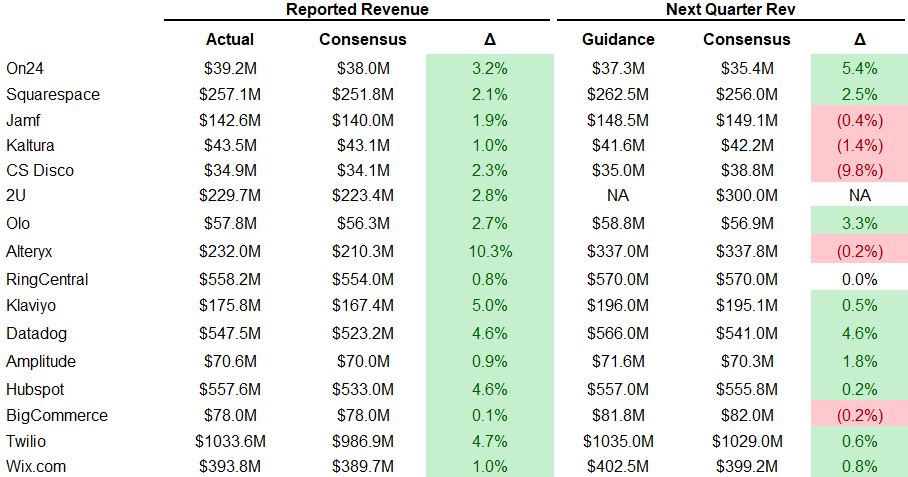

Quarterly Reports Summary

Top 10 EV / NTM Revenue Multiples

Top 10 Weekly Share Price Movement

Update on Multiples

SaaS businesses are generally valued on a multiple of their revenue - in most cases the projected revenue for the next 12 months. Revenue multiples are a shorthand valuation framework. Given most software companies are not profitable, or not generating meaningful FCF, it’s the only metric to compare the entire industry against. Even a DCF is riddled with long term assumptions. The promise of SaaS is that growth in the early years leads to profits in the mature years. Multiples shown below are calculated by taking the Enterprise Value (market cap + debt - cash) / NTM revenue.
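As a quick illustration of the multiple defined above, here is a minimal sketch. The function names and all of the input figures are hypothetical, chosen only to make the arithmetic easy to follow.

```python
def enterprise_value(market_cap: float, debt: float, cash: float) -> float:
    """Enterprise Value = market cap + debt - cash."""
    return market_cap + debt - cash


def ev_to_ntm_revenue(
    market_cap: float, debt: float, cash: float, ntm_revenue: float
) -> float:
    """EV divided by projected next-twelve-months revenue."""
    return enterprise_value(market_cap, debt, cash) / ntm_revenue


# Hypothetical company, all figures in $M.
multiple = ev_to_ntm_revenue(
    market_cap=10_000, debt=500, cash=1_500, ntm_revenue=1_800
)
print(f"EV / NTM revenue: {multiple:.1f}x")
```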

Overall Stats:

Overall Median: 5.0x

Top 5 Median: 14.5x

10Y: 4.6%

Bucketed by Growth. In the buckets below I consider high growth >30% projected NTM growth, mid growth 15%-30% and low growth <15%

High Growth Median: 11.8x

Mid Growth Median: 7.4x

Low Growth Median: 3.9x
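The bucketing can be sketched as follows. The thresholds are the ones described above, with growth of exactly 15% or 30% treated as mid growth (an assumption about the boundaries). The sample companies are made up and are not the data behind the medians shown.

```python
from statistics import median


def growth_bucket(ntm_growth_pct: float) -> str:
    """Classify a company by projected NTM revenue growth, in percent."""
    if ntm_growth_pct > 30:
        return "high"
    if ntm_growth_pct >= 15:
        return "mid"
    return "low"


def median_multiple_by_bucket(companies):
    """companies: iterable of (ntm_growth_pct, ev_ntm_revenue_multiple)."""
    buckets = {}
    for growth_pct, multiple in companies:
        buckets.setdefault(growth_bucket(growth_pct), []).append(multiple)
    return {name: median(values) for name, values in buckets.items()}


# Hypothetical (growth %, multiple) pairs.
sample = [(35.0, 12.0), (40.0, 10.0), (20.0, 7.0), (10.0, 4.0), (5.0, 3.0)]
result = median_multiple_by_bucket(sample)
print(result)
```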

EV / NTM Rev / NTM Growth

The below chart shows the EV / NTM revenue multiple divided by NTM consensus growth expectations. So a company trading at 20x NTM revenue that is projected to grow 100% would be trading at 0.2x. The goal of this graph is to show how cheap / expensive each stock is relative to its growth expectations.
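The growth-adjusted multiple is a single division, sketched below with the 20x / 100% example from the paragraph above. Growth is expressed in percentage points; the function name is just illustrative.

```python
def growth_adjusted_multiple(ev_ntm_rev_multiple: float, ntm_growth_pct: float) -> float:
    """EV / NTM revenue multiple divided by NTM growth in percentage points."""
    if ntm_growth_pct <= 0:
        # The ratio is not meaningful for flat or shrinking revenue.
        raise ValueError("growth must be positive")
    return ev_ntm_rev_multiple / ntm_growth_pct


# 20x NTM revenue, projected to grow 100%.
print(growth_adjusted_multiple(20.0, 100.0))
```

A lower number means you are paying less per point of expected growth.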

EV / NTM FCF

The line chart shows the median of all companies with a FCF multiple >0x and <100x. I created this subset to show companies where FCF is a relevant valuation metric.

Companies with negative NTM FCF are not listed on the chart
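The subset filter described above can be sketched like this. The sample multiples are hypothetical, and `None` stands in for companies with no usable FCF estimate (an assumption about how missing data might be represented).

```python
from statistics import median


def median_fcf_multiple(multiples):
    """Median EV / NTM FCF across companies with a multiple >0x and <100x."""
    relevant = [m for m in multiples if m is not None and 0 < m < 100]
    return median(relevant) if relevant else None


# Hypothetical multiples: a negative one (negative NTM FCF) and an
# extreme one (barely positive FCF) both fall outside the subset.
sample = [25.0, 40.0, -15.0, 250.0, 60.0, None]
print(median_fcf_multiple(sample))
```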

Scatter Plot of EV / NTM Rev Multiple vs NTM Rev Growth

How correlated is growth to valuation multiple?

Operating Metrics

Median NTM growth rate: 15%

Median LTM growth rate: 21%

Median Gross Margin: 75%

Median Operating Margin: (18%)

Median FCF Margin: 8%

Median Net Retention: 114%

Median CAC Payback: 35 months

Median S&M % Revenue: 42%

Median R&D % Revenue: 26%

Median G&A % Revenue: 17%

Comps Output

Rule of 40 shows rev growth + FCF margin (both LTM and NTM for growth + margins). FCF is calculated as Cash Flow from Operations - Capital Expenditures.
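A minimal sketch of the Rule of 40 calculation, using the FCF definition above. All inputs are hypothetical figures in $M, and the same function would apply to either the LTM or the NTM version depending on which period's numbers are passed in.

```python
def fcf(cash_flow_from_operations: float, capital_expenditures: float) -> float:
    """Free cash flow = Cash Flow from Operations - Capital Expenditures."""
    return cash_flow_from_operations - capital_expenditures


def rule_of_40(revenue: float, prior_revenue: float, cfo: float, capex: float) -> float:
    """Revenue growth % plus FCF margin %, both over the same period."""
    growth_pct = (revenue - prior_revenue) / prior_revenue * 100
    fcf_margin_pct = fcf(cfo, capex) / revenue * 100
    return growth_pct + fcf_margin_pct


# Hypothetical company: 25% growth, 12% FCF margin.
score = rule_of_40(revenue=1_000, prior_revenue=800, cfo=150, capex=30)
print(f"Rule of 40 score: {score:.0f}")
```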

GM Adjusted Payback is calculated as: (Previous Q S&M) / (Net New ARR in Q x Gross Margin) x 12. It shows the number of months it takes for a SaaS business to pay back its fully burdened CAC on a gross profit basis. Most public companies don’t report net new ARR, so I’m taking an implied ARR metric (quarterly subscription revenue x 4). Net new ARR is simply the ARR of the current quarter, minus the ARR of the previous quarter. Companies that do not disclose subscription rev have been left out of the analysis and are listed as NA.
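The payback formula above can be sketched as follows, including the implied ARR step. All inputs are hypothetical figures in $M, and returning `None` when net new ARR is not positive is an assumption made here, since the formula has no meaningful result in that case.

```python
def implied_arr(quarterly_subscription_revenue: float) -> float:
    """Implied ARR = quarterly subscription revenue x 4."""
    return quarterly_subscription_revenue * 4


def gm_adjusted_payback_months(
    prev_q_sales_and_marketing: float,
    prev_q_subscription_revenue: float,
    current_q_subscription_revenue: float,
    gross_margin: float,
):
    """(Previous Q S&M) / (Net New ARR in Q x Gross Margin) x 12."""
    net_new_arr = implied_arr(current_q_subscription_revenue) - implied_arr(
        prev_q_subscription_revenue
    )
    if net_new_arr <= 0:
        return None  # payback is undefined when ARR did not grow
    return prev_q_sales_and_marketing / (net_new_arr * gross_margin) * 12


# Hypothetical quarter: subscription revenue grows from 100 to 110,
# so implied net new ARR is 40.
months = gm_adjusted_payback_months(
    prev_q_sales_and_marketing=42,
    prev_q_subscription_revenue=100,
    current_q_subscription_revenue=110,
    gross_margin=0.75,
)
print(f"GM adjusted payback: {months:.1f} months")
```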

Sources used in this post include Bloomberg, Pitchbook and company filings

The information presented in this newsletter is the opinion of the author and does not necessarily reflect the view of any other person or entity, including Altimeter Capital Management, LP ("Altimeter"). The information provided is believed to be from reliable sources but no liability is accepted for any inaccuracies. This is for information purposes and should not be construed as an investment recommendation. Past performance is no guarantee of future performance. Altimeter is an investment adviser registered with the U.S. Securities and Exchange Commission. Registration does not imply a certain level of skill or training.

This post and the information presented are intended for informational purposes only. The views expressed herein are the author’s alone and do not constitute an offer to sell, or a recommendation to purchase, or a solicitation of an offer to buy, any security, nor a recommendation for any investment product or service. While certain information contained herein has been obtained from sources believed to be reliable, neither the author nor any of his employers or their affiliates have independently verified this information, and its accuracy and completeness cannot be guaranteed. Accordingly, no representation or warranty, express or implied, is made as to, and no reliance should be placed on, the fairness, accuracy, timeliness or completeness of this information. The author and all employers and their affiliated persons assume no liability for this information and no obligation to update the information or analysis contained herein in the future.
