Clouded Judgement 1.2.26 - Authority Is the AI Bottleneck

Every week I’ll provide updates on the latest trends in cloud software companies. Follow along to stay up to date!

Authority Is the AI Bottleneck

Every AI conversation eventually drifts toward the same set of questions. Which model should we use? Build or buy? How much will inference cost? What happens to accuracy at scale? These are all real questions, but they are also strangely orthogonal to whether AI actually changes anything meaningful inside a company. You can answer every one of them well and still end up with an AI strategy that feels impressive in demos and immaterial in practice.

There is a more important decision sitting underneath all of this, and most companies are not talking about it directly. They are making it implicitly, often without realizing it. That decision is whether AI is allowed to be authoritative or merely assistive.

Most enterprise AI today is firmly in the assistive bucket. AI drafts the email, but a human sends it. AI suggests the next action, but a human approves it. AI flags a risk, but a human decides what to do. AI summarizes the ticket, the contract, the account, the customer, and then waits patiently for someone to act. This is the comfortable version of AI. It feels safe. It feels controllable. It also feels productive in a very local sense. People save time. Work moves a bit faster. Everyone can point to usage charts going up and feel good about progress.

What rarely happens in this mode is a step change in outcomes. Costs do not collapse. Cycle times do not fundamentally reset. Headcount plans do not bend. The organization still runs at the speed of human review, human judgment, and human bottlenecks. The AI is helpful, but it is not decisive.

That distinction turns out to matter more than almost anything else.

When AI is assistive, it improves efficiency at the margin. When AI is authoritative, it rewrites the workflow. The moment software is allowed to act instead of suggest, entire layers of process either disappear or get reshaped. Decisions happen continuously instead of episodically. Exceptions become the focus rather than the norm. Cost structures start to look different. The ROI that everyone is searching for finally has somewhere to show up.

This is also the point where things get uncomfortable, which is exactly why so many organizations stop short of crossing that line.

Allowing AI to be authoritative forces a series of hard questions that have nothing to do with models. Where does the truth live? Which system is canonical when two sources disagree? What level of error is acceptable, and compared to what baseline? Who is accountable when software makes the wrong call? How do you roll back decisions that were executed automatically rather than reviewed manually? These are not AI questions in the narrow sense. They are organizational questions that AI makes impossible to avoid.

It is much easier to keep AI in an advisory role and declare victory. You can ship features. You can talk about adoption. You can avoid rethinking how work actually gets done. But you also cap the upside. An assistive system still depends on human attention to move forward. And human attention is exactly the scarce resource companies are trying to escape.

This is why so many AI initiatives feel stuck in a strange middle ground. They are clearly useful. They are often loved by users. And yet they fail to produce the kind of economic impact that was promised. The problem is not that the AI is bad. The problem is that it has no authority.

There is a pattern emerging among the teams that are seeing outsized returns from AI. They are not necessarily using more advanced models. They are not always spending more on infrastructure. What they are doing differently is deciding, explicitly, where software is allowed to take responsibility. They pick narrow domains. They define tight guardrails. They invest heavily in sources of truth. And then they let the system act.

Once that decision is made, everything downstream looks different. The architecture matters more. Data quality stops being a talking point and becomes existential. Observability and rollback move from nice to have to mandatory. Trust becomes something you engineer rather than something you hope for. The work is harder, but it compounds.
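To make "narrow domains, tight guardrails, observability and rollback" a bit more concrete, here is a minimal, hypothetical sketch. Everything in it (the `Decision`, `GuardrailPolicy` and `AuthoritativeExecutor` names, the refund domain, the dollar and confidence thresholds) is an illustrative assumption, not a description of any real product. The idea it shows: software acts on its own only inside an allowed domain and below set limits, escalates everything else to a human, logs every outcome, and keeps an undo handle so automatically executed decisions can be reversed.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Set, Tuple

Undo = Callable[[], None]


@dataclass
class Decision:
    domain: str        # e.g. "refunds" (hypothetical domain)
    amount: float      # size of the action, in dollars
    confidence: float  # model's confidence in the decision, 0..1


@dataclass
class GuardrailPolicy:
    allowed_domains: Set[str]
    max_amount: float
    min_confidence: float

    def permits(self, d: Decision) -> bool:
        # Authority only inside a narrow domain, below a size limit, above a confidence bar.
        return (d.domain in self.allowed_domains
                and d.amount <= self.max_amount
                and d.confidence >= self.min_confidence)


@dataclass
class AuthoritativeExecutor:
    policy: GuardrailPolicy
    # Audit log entries: (status, decision, undo handle or None).
    audit_log: List[Tuple[str, Decision, Optional[Undo]]] = field(default_factory=list)

    def handle(self, d: Decision, execute: Callable[[], Undo]) -> str:
        if not self.policy.permits(d):
            self.audit_log.append(("escalated", d, None))  # a human decides instead
            return "escalated"
        undo = execute()  # the software acts and returns a way to reverse the action
        self.audit_log.append(("executed", d, undo))
        return "executed"

    def rollback_last(self) -> bool:
        # Walk the log backwards and reverse the most recent executed action.
        for i in range(len(self.audit_log) - 1, -1, -1):
            status, d, undo = self.audit_log[i]
            if status == "executed" and undo is not None:
                undo()
                self.audit_log[i] = ("rolled_back", d, None)
                return True
        return False


# Usage with a hypothetical in-memory refund ledger.
ledger: List[float] = []


def issue_refund(amount: float) -> Callable[[], Undo]:
    def run() -> Undo:
        ledger.append(amount)
        return lambda: ledger.remove(amount)
    return run


executor = AuthoritativeExecutor(GuardrailPolicy({"refunds"}, max_amount=200.0, min_confidence=0.9))
print(executor.handle(Decision("refunds", 50.0, 0.97), issue_refund(50.0)))      # executed
print(executor.handle(Decision("refunds", 5000.0, 0.99), issue_refund(5000.0)))  # escalated
executor.rollback_last()
print(ledger)  # []
```

The design choice worth noting is that the undo handle is captured at execution time, so rollback is part of acting rather than an afterthought.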

Most companies will not make this leap all at once. That is fine. Authority does not have to be absolute. But it does have to be real somewhere. Until an AI system is allowed to own an outcome end to end, it will always feel like a productivity tool rather than a transformational one.

The irony is that the biggest AI decision companies face has very little to do with AI at all. It is a decision about control. About trust. About whether software is allowed to do more than whisper suggestions into a human ear. Assistive AI saves time. Authoritative AI changes outcomes. And that line, more than any model choice or benchmark score, is where the real value starts to show up. Many legacy software vendors will take comfort that their software is “trusted.” However, over time, trust will build in AI systems. Just as it took quite some time for people to trust the cloud (is it secure, is it performant, can I control it, etc.), it will take time for people to trust AI systems. But once they do, the floodgates open.

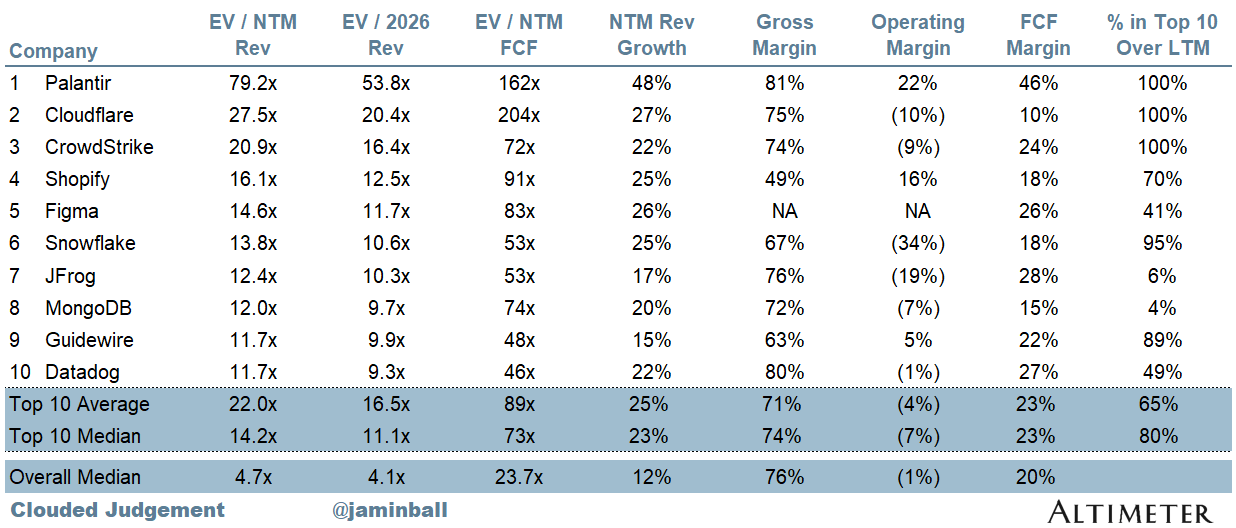

Top 10 EV / NTM Revenue Multiples

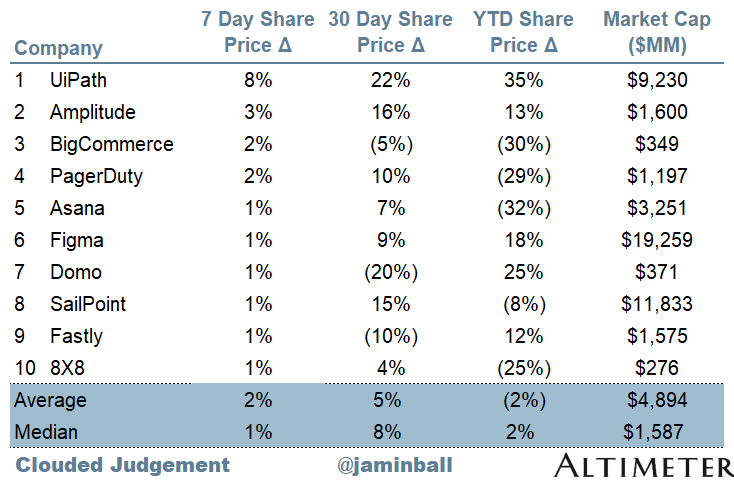

Top 10 Weekly Share Price Movement

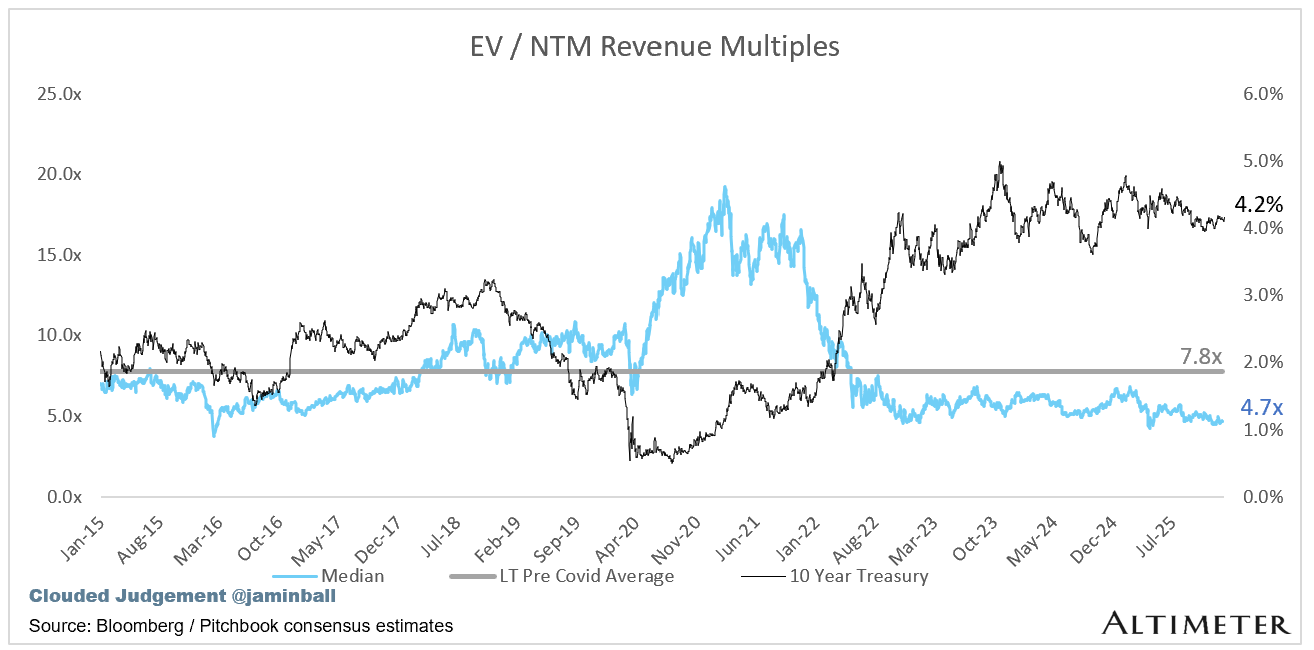

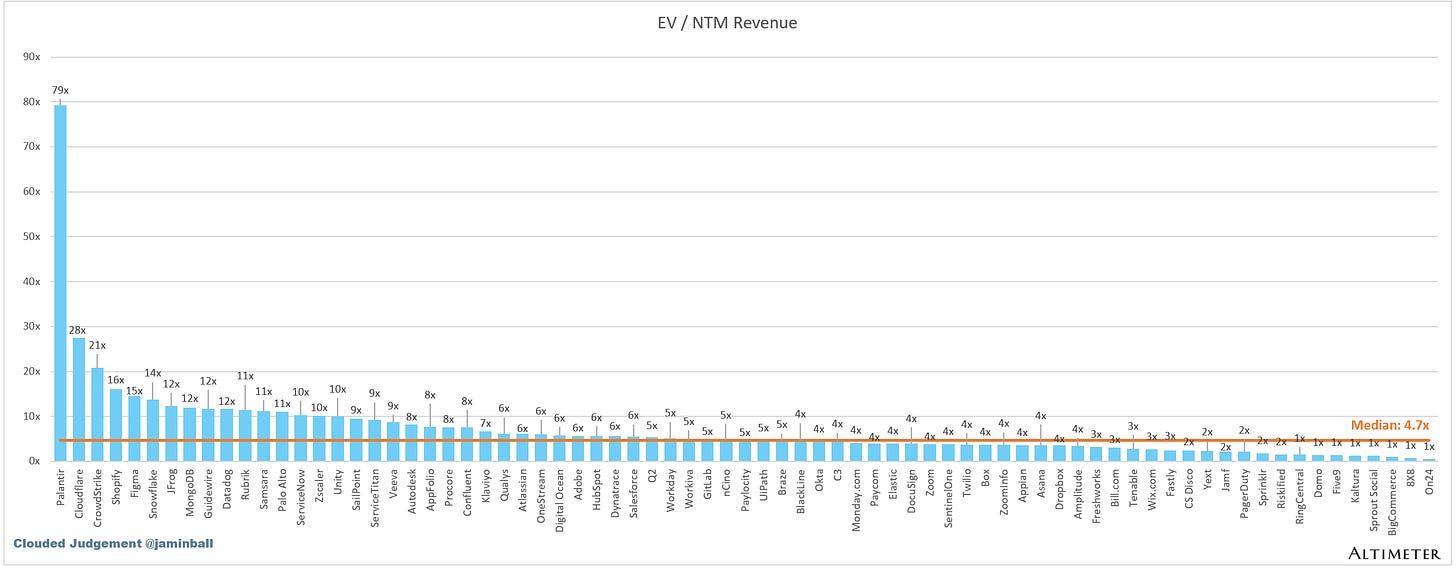

Update on Multiples

SaaS businesses are generally valued on a multiple of their revenue, in most cases the projected revenue for the next 12 months. Revenue multiples are a shorthand valuation framework. Given that most software companies are not profitable, or are not generating meaningful FCF, it’s the only metric to compare the entire industry against. Even a DCF is riddled with long-term assumptions. The promise of SaaS is that growth in the early years leads to profits in the mature years. Multiples shown below are calculated by taking the Enterprise Value (market cap + debt - cash) / NTM revenue.
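The multiple is simple arithmetic, but a short sketch pins down the order of operations. The `Company` type, its field names and the example figures are hypothetical; all dollar amounts are assumed to be in the same units (e.g. $M).

```python
from dataclasses import dataclass


@dataclass
class Company:
    # Hypothetical fields; all values in the same units (e.g. $M).
    market_cap: float
    debt: float
    cash: float
    ntm_revenue: float


def enterprise_value(c: Company) -> float:
    """Enterprise Value = market cap + debt - cash."""
    return c.market_cap + c.debt - c.cash


def ev_ntm_revenue_multiple(c: Company) -> float:
    """EV / NTM revenue, the multiple used throughout this post."""
    if c.ntm_revenue <= 0:
        raise ValueError("NTM revenue must be positive")
    return enterprise_value(c) / c.ntm_revenue


# Hypothetical company: $10B market cap, $1B debt, $2B cash, $1B NTM revenue.
example = Company(market_cap=10_000, debt=1_000, cash=2_000, ntm_revenue=1_000)
print(f"{ev_ntm_revenue_multiple(example):.1f}x")  # 9.0x
```

Note that net cash lowers EV, so a cash-rich company trades at a lower EV multiple than its market cap alone would suggest.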

Overall Stats:

Overall Median: 4.7x

Top 5 Median: 20.9x

10Y Treasury Yield: 4.2%

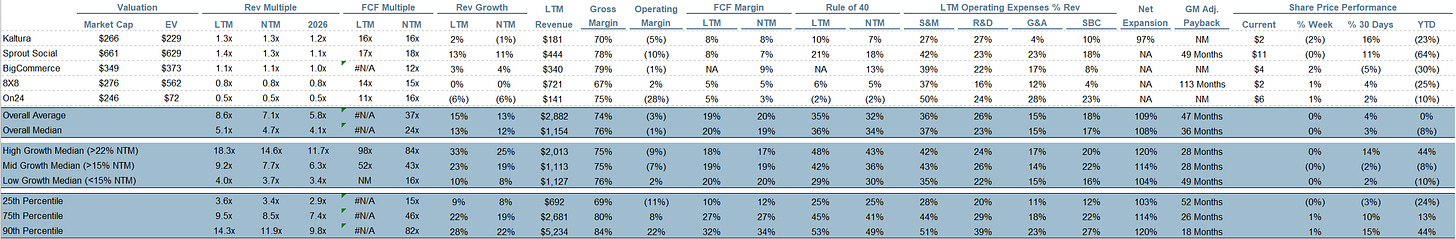

Bucketed by Growth. In the buckets below I consider high growth >22% projected NTM growth, mid growth 15%-22% and low growth <15%. I had to adjust the cutoff for “high growth.” If 22% feels a bit arbitrary, it’s because it is… I just picked a cutoff where there were ~10 companies that fit into the high growth bucket, so the sample size was large enough to be meaningful.
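The bucketing rule can be sketched as follows. This assumes growth is expressed as a fraction (0.25 = 25%) and that the 15% and 22% boundaries themselves fall in the mid bucket, since the text says "15%-22%"; the sample data is hypothetical.

```python
from statistics import median
from typing import Dict, List, Tuple

HIGH_GROWTH_CUTOFF = 0.22  # high growth: >22% projected NTM growth
LOW_GROWTH_CUTOFF = 0.15   # low growth: <15% projected NTM growth


def growth_bucket(ntm_growth: float) -> str:
    """Assign a company to a growth bucket from its NTM consensus growth."""
    if ntm_growth > HIGH_GROWTH_CUTOFF:
        return "high"
    if ntm_growth < LOW_GROWTH_CUTOFF:
        return "low"
    return "mid"  # 15%-22%, boundaries inclusive (assumption)


def bucket_medians(companies: List[Tuple[float, float]]) -> Dict[str, float]:
    """companies: (ntm_growth, ev_ntm_rev_multiple) pairs -> median multiple per bucket."""
    buckets: Dict[str, List[float]] = {}
    for growth, multiple in companies:
        buckets.setdefault(growth_bucket(growth), []).append(multiple)
    return {name: median(values) for name, values in buckets.items()}


# Hypothetical sample of (NTM growth, EV / NTM revenue multiple).
sample = [(0.30, 15.0), (0.25, 12.0), (0.18, 7.0), (0.16, 8.0), (0.10, 3.0), (0.05, 4.0)]
print(bucket_medians(sample))
```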

High Growth Median: 14.6x

Mid Growth Median: 7.7x

Low Growth Median: 3.7x

EV / NTM Rev / NTM Growth

The chart below shows the EV / NTM revenue multiple divided by NTM consensus growth expectations. So a company trading at 20x NTM revenue that is projected to grow 100% would be trading at 0.2x. The goal of this graph is to show how relatively cheap / expensive each stock is relative to its growth expectations.
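The worked example (20x at 100% growth = 0.2x) implies growth is divided in percentage points, not as a fraction. A minimal sketch under that reading; rejecting zero or negative growth is an assumption, since the post does not say how those companies are handled.

```python
def growth_adjusted_multiple(ev_ntm_rev_multiple: float, ntm_growth_pct: float) -> float:
    """EV / NTM revenue multiple divided by NTM consensus growth in percentage points.

    Growth is passed as a percentage (100 for 100%), matching the example in the
    text: 20x / 100 = 0.2x. Lower values mean cheaper relative to expected growth.
    """
    if ntm_growth_pct <= 0:
        # Assumption: the ratio is not meaningful for flat or shrinking revenue.
        raise ValueError("growth-adjusted multiple requires positive growth")
    return ev_ntm_rev_multiple / ntm_growth_pct


print(growth_adjusted_multiple(20.0, 100.0))  # 0.2
# Hypothetical comparison: 10x at 25% growth vs 6x at 10% growth.
print(growth_adjusted_multiple(10.0, 25.0))   # 0.4
print(growth_adjusted_multiple(6.0, 10.0))    # 0.6
```

In this hypothetical pair, the 6x stock has the lower headline multiple but is the more expensive one once growth is taken into account.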

EV / NTM FCF

The line chart shows the median of all companies with an FCF multiple >0x and <100x. I created this subset to show companies where FCF is a relevant valuation metric.

Companies with negative NTM FCF are not listed on the chart.
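The filtering rule for the FCF median can be sketched like this. Treating zero NTM FCF the same as negative FCF (no multiple) is an assumption, and the (EV, NTM FCF) pairs are hypothetical.

```python
from statistics import median
from typing import List, Optional


def ev_ntm_fcf_multiple(ev: float, ntm_fcf: float) -> Optional[float]:
    """EV / NTM FCF, or None when NTM FCF is zero or negative (not charted)."""
    if ntm_fcf <= 0:
        return None
    return ev / ntm_fcf


def median_relevant_fcf_multiple(multiples: List[Optional[float]]) -> float:
    """Median over the subset with an FCF multiple >0x and <100x."""
    relevant = [m for m in multiples if m is not None and 0 < m < 100]
    if not relevant:
        raise ValueError("no companies in the >0x and <100x range")
    return median(relevant)


# Hypothetical (EV, NTM FCF) pairs in $M.
companies = [(9_000, 300), (5_000, 250), (12_000, 100), (4_000, -50), (6_000, 150)]
multiples = [ev_ntm_fcf_multiple(ev, fcf) for ev, fcf in companies]
print(median_relevant_fcf_multiple(multiples))  # 30.0
```

Here the 120x company and the negative-FCF company both drop out, leaving 20x, 30x and 40x.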

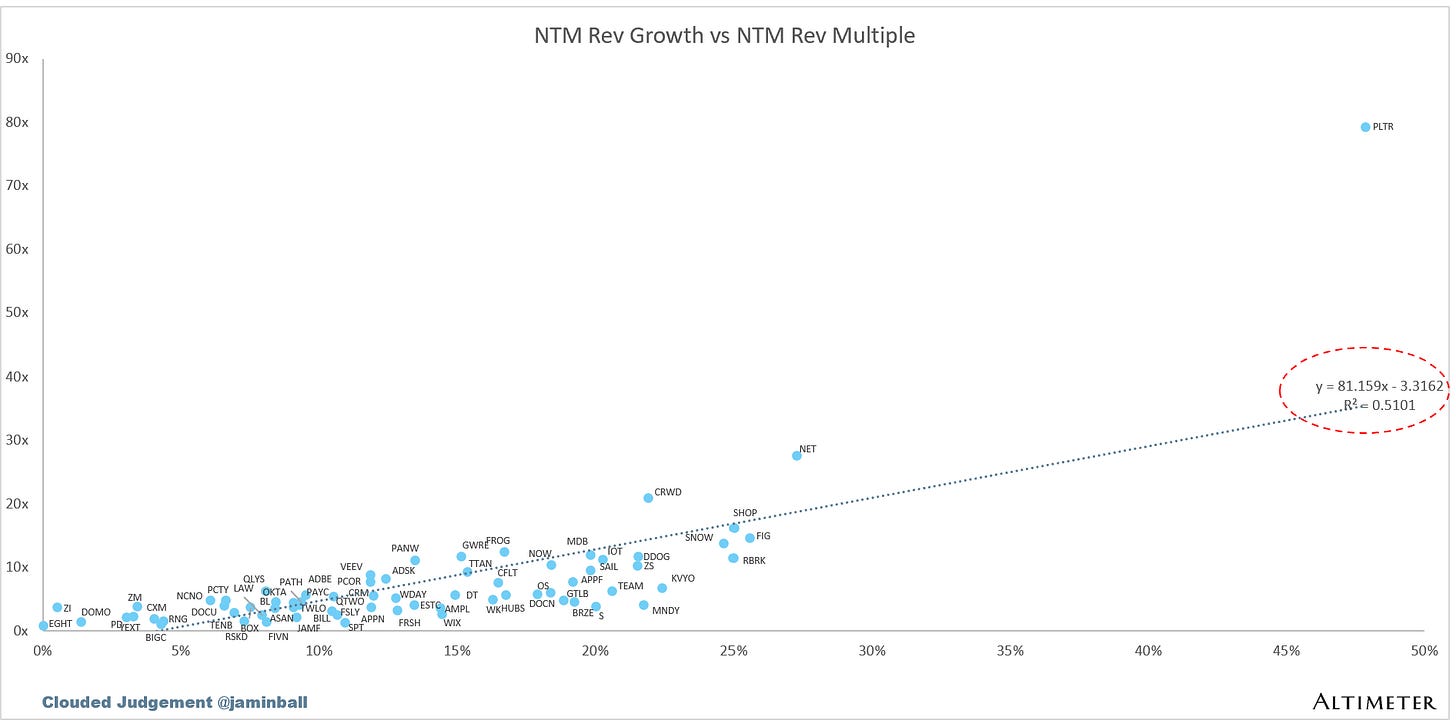

Scatter Plot of EV / NTM Rev Multiple vs NTM Rev Growth

How correlated is growth to valuation multiple?
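One common way to quantify the scatter is a Pearson correlation between NTM growth and the EV / NTM revenue multiple, with R² from a single-variable linear fit. The post does not state which statistic it uses, so this is an assumed approach, and the sample data below is hypothetical.

```python
from math import sqrt
from typing import Sequence


def pearson_r(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation between NTM growth (xs) and EV / NTM revenue multiples (ys)."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length series with at least 2 points")
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def r_squared(xs: Sequence[float], ys: Sequence[float]) -> float:
    """For a single-variable linear fit, R^2 equals the squared Pearson r."""
    return pearson_r(xs, ys) ** 2


# Hypothetical sample: NTM growth (%) and EV / NTM revenue multiple (x).
growth = [5, 10, 15, 20, 30]
multiple = [3.0, 4.5, 7.0, 9.0, 14.0]
print(f"r = {pearson_r(growth, multiple):.2f}, R^2 = {r_squared(growth, multiple):.2f}")
```

An R² close to 1 would mean growth explains most of the variation in multiples; real software comps typically show a much looser relationship than this toy sample.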

Operating Metrics

Median NTM growth rate: 12%

Median LTM growth rate: 13%

Median Gross Margin: 76%

Median Operating Margin: (1%)

Median FCF Margin: 19%

Median Net Retention: 108%

Median CAC Payback: 36 months

Median S&M % Revenue: 37%

Median R&D % Revenue: 23%

Median G&A % Revenue: 15%

Comps Output

Rule of 40 shows rev growth + FCF margin (both LTM and NTM for growth + margins). FCF is calculated as Cash Flow from Operations - Capital Expenditures.
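The Rule of 40 calculation can be sketched as below, run once with LTM inputs and once with NTM inputs. Capex is assumed to be entered as a positive number (some data feeds report it as negative), and the company figures are hypothetical.

```python
def free_cash_flow(cash_from_operations: float, capital_expenditures: float) -> float:
    """FCF = Cash Flow from Operations - Capital Expenditures (capex as a positive number)."""
    return cash_from_operations - capital_expenditures


def rule_of_40(revenue_growth_pct: float, fcf_margin_pct: float) -> float:
    """Revenue growth (%) + FCF margin (%)."""
    return revenue_growth_pct + fcf_margin_pct


# Hypothetical LTM figures in $M.
revenue, prior_revenue = 1_000.0, 800.0
fcf = free_cash_flow(cash_from_operations=250.0, capital_expenditures=50.0)
growth_pct = (revenue / prior_revenue - 1) * 100  # 25% growth
fcf_margin_pct = fcf / revenue * 100              # 20% FCF margin
score = rule_of_40(growth_pct, fcf_margin_pct)
print(f"Rule of 40 score: {score:.0f}")
```

A score of 40 or more is the usual benchmark; this hypothetical company clears it at 45.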

GM Adjusted Payback is calculated as: (Previous Q S&M) / (Net New ARR in Q x Gross Margin) x 12. It shows the number of months it takes for a SaaS business to pay back its fully burdened CAC on a gross profit basis. Most public companies don’t report net new ARR, so I’m taking an implied ARR metric (quarterly subscription revenue x 4). Net new ARR is simply the ARR of the current quarter minus the ARR of the previous quarter. Companies that do not disclose subscription rev have been left out of the analysis and are listed as NA.
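Putting the payback formula and the implied ARR proxy together gives the sketch below. Gross margin is passed as a fraction (0.80 = 80%), and returning `None` when net new ARR is zero or negative is an assumption, since the payback period is undefined in that case. The inputs are hypothetical.

```python
from typing import Optional


def implied_arr(quarterly_subscription_revenue: float) -> float:
    """Implied ARR = quarterly subscription revenue x 4."""
    return quarterly_subscription_revenue * 4


def gm_adjusted_payback_months(
    prev_q_sales_and_marketing: float,
    curr_q_subscription_revenue: float,
    prev_q_subscription_revenue: float,
    gross_margin: float,
) -> Optional[float]:
    """(Previous Q S&M) / (Net New ARR in Q x Gross Margin) x 12."""
    net_new_arr = implied_arr(curr_q_subscription_revenue) - implied_arr(prev_q_subscription_revenue)
    if net_new_arr <= 0:
        return None  # assumption: payback undefined when implied ARR did not grow
    return prev_q_sales_and_marketing / (net_new_arr * gross_margin) * 12


# Hypothetical inputs in $M: $120M prior-quarter S&M, subscription revenue
# of $260M this quarter vs $250M last quarter, 80% gross margin.
months = gm_adjusted_payback_months(120.0, 260.0, 250.0, 0.80)
print(f"{months:.1f} months")  # 45.0 months
```

The x4 proxy converts $10M of quarterly subscription revenue growth into $40M of net new ARR, which is why small quarterly changes move this metric a lot.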

Sources used in this post include Bloomberg, Pitchbook and company filings.

The information presented in this newsletter is the opinion of the author and does not necessarily reflect the view of any other person or entity, including Altimeter Capital Management, LP (“Altimeter”). The information provided is believed to be from reliable sources but no liability is accepted for any inaccuracies. This is for information purposes and should not be construed as an investment recommendation. Past performance is no guarantee of future performance. Altimeter is an investment adviser registered with the U.S. Securities and Exchange Commission. Registration does not imply a certain level of skill or training. Altimeter and its clients trade in public securities and have made and/or may make investments in or investment decisions relating to the companies referenced herein. The views expressed herein are those of the author and not of Altimeter or its clients, which reserve the right to make investment decisions or engage in trading activity that would be (or could be construed as) consistent and/or inconsistent with the views expressed herein.

This post and the information presented are intended for informational purposes only. The views expressed herein are the author’s alone and do not constitute an offer to sell, or a recommendation to purchase, or a solicitation of an offer to buy, any security, nor a recommendation for any investment product or service. While certain information contained herein has been obtained from sources believed to be reliable, neither the author nor any of his employers or their affiliates have independently verified this information, and its accuracy and completeness cannot be guaranteed. Accordingly, no representation or warranty, express or implied, is made as to, and no reliance should be placed on, the fairness, accuracy, timeliness or completeness of this information. The author and all employers and their affiliated persons assume no liability for this information and no obligation to update the information or analysis contained herein in the future.